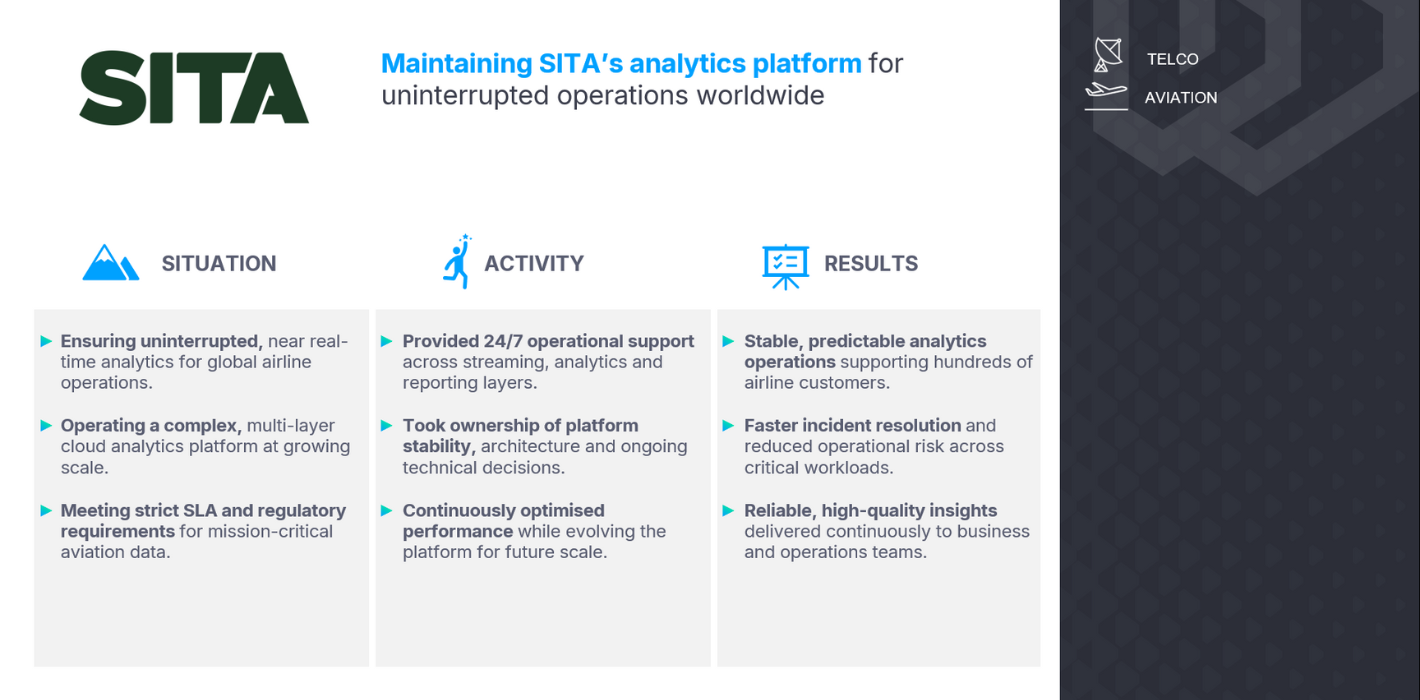

The client

The client is a globally diversified healthcare company whose central purpose is to help people live their healthiest possible lives. The organization offers a broad portfolio of market-leading products that align with favorable long-term healthcare trends in both developed and developing markets. It has more than 115,000 employees and 4,000+ FLMs in 160+ countries, all working to discover new ways to make life better.

The challenge

The organization’s approach to systematic literature reviews was fragmented, manual, and heavily reliant on external vendors, resulting in high costs, inefficiencies, and limited scalability.

- Without an internal solution, the company depended on external vendors and lacked flexibility in customizing search strategies, impacting both costs and operational agility.

- Manual, repetitive processes slowed research and delayed critical decision-making across therapeutic areas, with experts needing to rerun complex searches without automation.

- Subject matter experts spent excessive time on low-value tasks such as literature identification and classification, reducing their focus on interpretive, high-impact work.

- Existing tools could not support multiple research domains simultaneously, limiting the ability to address diverse information needs across projects.

- There was no intelligent system to generate precise search strategies, filter results automatically, or adapt based on user feedback, creating inconsistencies and requiring extensive human intervention.

The scope

The project aimed to develop an internal, scalable solution that would modernize and automate systematic literature reviews while maintaining expert oversight.

- Develop an AI-powered solution utilizing Large Language Models (LLMs) to automate complex literature search processes and support advanced query interpretation.

- Enable generation of precise, context-specific search strategies across multiple biomedical databases such as PubMed.

- Implement automated filtering of relevant references based on customizable, LLM-powered criteria that adapt dynamically to user feedback.

- Provide a user-centric interface that supports expert interaction, feedback collection, and refinement of search results through human-in-the-loop mechanisms.

The solution

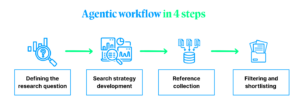

To address these challenges, the BitPeak team developed an agentic workflow powered by LLMs. The solution automates and optimizes the literature search process for systematic reviews, with a focus on significantly reducing costs, improving efficiency, and empowering subject matter experts. It was inspired by the methodology outlined in the research paper "A 24-step guide on how to design, conduct, and successfully publish a systematic review and meta-analysis in medical research".

Step 1: Define research question: LLMs convert research questions into structured PICO (Population, Intervention, Comparator, Outcome) statements, creating a clear foundation for reviews.
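
As an illustration of Step 1, the sketch below shows one way a research question could be turned into a prompt and the model's reply validated as a PICO statement. The `Pico` class, the prompt wording, and the JSON reply format are assumptions made for this example, not the project's actual implementation, and the model reply is hard-coded so the snippet runs without an LLM.

```python
import json
from dataclasses import dataclass

PICO_KEYS = ("population", "intervention", "comparator", "outcome")


@dataclass(frozen=True)
class Pico:
    """Hypothetical container for the four PICO elements."""
    population: str
    intervention: str
    comparator: str
    outcome: str


def build_pico_prompt(question: str) -> str:
    """Build a prompt asking the model for a JSON object with the PICO keys."""
    return (
        "Convert the research question into a PICO statement. "
        "Reply with a JSON object with the keys "
        "population, intervention, comparator, outcome.\n"
        f"Research question: {question}"
    )


def parse_pico(reply: str) -> Pico:
    """Validate the model reply; raise ValueError if an element is missing."""
    data = json.loads(reply)
    fields = {}
    for key in PICO_KEYS:
        value = str(data.get(key, "")).strip()
        if not value:
            raise ValueError(f"missing PICO element: {key}")
        fields[key] = value
    return Pico(**fields)


# Hard-coded stand-in for an LLM reply (assumption: the model returns JSON).
reply = json.dumps({
    "population": "adults with hypertension",
    "intervention": "aerobic exercise",
    "comparator": "usual care",
    "outcome": "systolic blood pressure",
})
pico = parse_pico(reply)
print(pico)
```

Validating the reply before it enters the workflow gives the expert a well-defined object to approve or correct at this step.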

Step 2: Develop search strategy: Using the PICO statement, LLMs generate MeSH terms and validate them via APIs (e.g., PubMed). A LangGraph workflow then expands synonyms, removes duplicates, and builds a complete search term list.
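
The assembly part of Step 2 can be sketched in plain Python. The example assumes the MeSH headings and synonyms have already been generated and validated upstream; the helper names (`dedupe`, `concept_block`, `build_query`) are hypothetical. The `[MeSH Terms]` and `[Title/Abstract]` suffixes are standard PubMed search field tags.

```python
def dedupe(terms: list[str]) -> list[str]:
    """Drop blank and case-insensitive duplicate terms, keeping first spelling."""
    seen = set()
    unique = []
    for term in terms:
        cleaned = term.strip()
        key = cleaned.lower()
        if key and key not in seen:
            seen.add(key)
            unique.append(cleaned)
    return unique


def concept_block(mesh_terms: list[str], synonyms: list[str]) -> str:
    """OR together the MeSH headings and free-text synonyms of one concept."""
    parts = [f'"{t}"[MeSH Terms]' for t in dedupe(mesh_terms)]
    parts += [f'"{t}"[Title/Abstract]' for t in dedupe(synonyms)]
    return "(" + " OR ".join(parts) + ")"


def build_query(concepts: list[tuple[list[str], list[str]]]) -> str:
    """AND together one block per concept (e.g. population, intervention)."""
    return " AND ".join(concept_block(m, s) for m, s in concepts if m or s)


# Illustrative input: (MeSH headings, synonyms) per PICO concept.
concepts = [
    (["Hypertension"], ["high blood pressure", "High Blood Pressure"]),
    (["Exercise"], ["physical activity"]),
]
query = build_query(concepts)
print(query)
```

Terms within a concept are combined with OR to widen recall, while concepts are combined with AND to keep the result set on topic.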

Step 3: Collect references: The finalized search terms are used to query literature databases, and the application supports interactive refinement and filtering of the results.
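
For PubMed, Step 3 could be implemented against the public NCBI E-utilities ESearch endpoint. The sketch below only builds the request URL and parses a response body, so it runs without network access. The function names are hypothetical, the sample payload is a placeholder with made-up identifiers, and how the actual solution calls PubMed is not described in this case study.

```python
from urllib.parse import parse_qs, urlencode, urlparse

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def esearch_url(query: str, retmax: int = 100, retstart: int = 0) -> str:
    """Build an ESearch request URL that returns PubMed IDs as JSON."""
    params = {
        "db": "pubmed",
        "term": query,
        "retmode": "json",
        "retmax": retmax,      # page size
        "retstart": retstart,  # offset, for paging through large result sets
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


def extract_pmids(payload: dict) -> list[str]:
    """Pull the ID list out of a decoded ESearch JSON response."""
    return list(payload.get("esearchresult", {}).get("idlist", []))


url = esearch_url('"Hypertension"[MeSH Terms] AND "Exercise"[MeSH Terms]', retmax=50)
print(url)

# Placeholder payload in the shape ESearch returns; the IDs are not real PMIDs.
sample_payload = {"esearchresult": {"count": "2", "idlist": ["00000001", "00000002"]}}
print(extract_pmids(sample_payload))
```

In a real run the URL would be fetched with an HTTP client and the decoded JSON passed to `extract_pmids`, paging with `retstart` until all IDs are collected.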

Step 4: Filter and shortlist: LLMs apply inclusion and exclusion criteria, assessing relevance through structured prompts to ensure high-quality results.
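
A minimal sketch of Step 4 follows, assuming the model is asked to answer `INCLUDE: <reason>` or `EXCLUDE: <reason>` for each reference. The data classes, the prompt wording, and the reply format are assumptions for illustration. The LLM call is injected as a function, and a keyword-based stub stands in for it so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Reference:
    pmid: str
    title: str
    abstract: str


@dataclass
class Decision:
    pmid: str
    include: bool
    reason: str


def build_screening_prompt(ref: Reference, inclusion: list[str], exclusion: list[str]) -> str:
    """Structured prompt listing the criteria and the reference to assess."""
    return (
        "Inclusion criteria:\n- " + "\n- ".join(inclusion) + "\n"
        "Exclusion criteria:\n- " + "\n- ".join(exclusion) + "\n"
        f"Title: {ref.title}\nAbstract: {ref.abstract}\n"
        "Answer with 'INCLUDE: <reason>' or 'EXCLUDE: <reason>'."
    )


def screen(refs: list[Reference], inclusion: list[str], exclusion: list[str],
           ask_llm: Callable[[str], str]) -> list[Decision]:
    """Ask the model about each reference and parse its verdict."""
    decisions = []
    for ref in refs:
        reply = ask_llm(build_screening_prompt(ref, inclusion, exclusion)).strip()
        verdict, _, reason = reply.partition(":")
        include = verdict.strip().upper() == "INCLUDE"  # anything else excludes
        decisions.append(Decision(ref.pmid, include, reason.strip()))
    return decisions


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real model call (keyword match only)."""
    if "mice" in prompt.lower():
        return "EXCLUDE: animal study"
    return "INCLUDE: meets inclusion criteria"


refs = [
    Reference("00000001", "Exercise and blood pressure",
              "A randomized trial of aerobic exercise in adults with hypertension."),
    Reference("00000002", "Treadmill training in rodents",
              "Treadmill training lowered blood pressure in mice."),
]
decisions = screen(refs, ["randomized controlled trials in adults"],
                   ["animal studies"], fake_llm)
for d in decisions:
    print(d)
```

Keeping the reason alongside each verdict lets experts review and override individual decisions, which matches the human-in-the-loop design described in this case study.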

AI solution with human supervision

Each step was implemented in Python with a Gradio interface, enabling experts to monitor, approve, and refine results throughout the workflow. The human-in-the-loop approach was enhanced using LangGraph to build a graph-based agentic workflow.

The final output is a clear, actionable Excel summary of references, showing the included and excluded results from each step. The solution also allows the same research question to be reprocessed in the future, letting users modify or expand search strategies as needed.
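
The per-step summary described above could be produced along these lines. This is a sketch under stated assumptions: the record layout `(step, pmid, included)` is hypothetical, and CSV from the Python standard library is used as a stand-in for the Excel workbook, whose actual layout is not described here.

```python
import csv
import io
from collections import Counter


def summarize(records: list[tuple[str, str, bool]]) -> list[dict]:
    """Count included and excluded references per workflow step."""
    counts = Counter((step, included) for step, _pmid, included in records)
    steps = list(dict.fromkeys(step for step, _pmid, _inc in records))  # keep order
    return [
        {"step": s, "included": counts[(s, True)], "excluded": counts[(s, False)]}
        for s in steps
    ]


def to_csv(rows: list[dict]) -> str:
    """Serialize the summary rows as CSV text, which Excel can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["step", "included", "excluded"],
                            lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Illustrative records with placeholder identifiers.
records = [
    ("collect", "00000001", True),
    ("collect", "00000002", True),
    ("filter", "00000001", True),
    ("filter", "00000002", False),
]
rows = summarize(records)
print(to_csv(rows))
```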

To reach our goal, we used the following tech stack:

- LangChain – to structure LLM workflows, integrate Azure OpenAI models with Python, and enable modular task execution.

- LangGraph – to build graph-based agentic workflows for advanced orchestration of LLM-driven tasks.

- Gradio – to create simple, interactive user interfaces and connect frontend components with backend services.

- FastAPI – to build efficient and scalable APIs for backend communication.

- Azure OpenAI – to provide scalable, secure access to deployed large language models used across the solution.

- MySQL – to store and manage references, metadata, and intermediate results.

- Docker – to containerize the application for consistent deployment and development environments.

- AWS Elastic Container Service (ECS) – to deploy and run Docker containers in a scalable and reliable cloud environment.

- AWS CodeBuild – to enable continuous integration and automate build processes during development.

Benefits:

Operations

The solution reduced the average turnaround time for a single research question from 12 days (when outsourced) to just 2 hours, dramatically accelerating the systematic review process. This improvement enabled faster decision-making, shortened project timelines, and significantly lowered costs associated with external vendors.

Medical managers

By automating repetitive tasks such as PICO generation, search strategy design, and reference filtering, the solution allowed subject matter experts to focus on high-impact work. The tool outperformed manual reviews, retrieving 41 relevant references compared to 22 found by experts – delivering both time savings and improved accuracy.

Product developers

By accelerating research workflows, the tool also supported faster product development and enhanced market responsiveness. Endorsements from Medical Writing Agencies and Key Opinion Leaders confirmed the solution’s completeness and usability, boosting internal confidence in the organization’s AI-driven capabilities.

(+48) 508 425 378

office@bitpeak.pl