Databricks is much more than a platform for large-scale analytics: it provides a versatile environment for solving complex data challenges across industries. At BitPeak, we leverage its capabilities to tailor solutions that meet the specific needs of our clients and their business contexts. Discover how we put Databricks to work in real-world projects.

Over time, we’ve used Databricks to address a wide range of technical challenges – from unifying fragmented data sources and enforcing fine-grained access controls to building real-time pipelines and automating machine learning workflows. Each case required balancing flexibility with control and adapting Databricks’ features to the reality of enterprise-scale environments. The following examples highlight key patterns and solutions that demonstrate the platform’s versatility in action.

Metadata-driven ELT and ETL frameworks

Building scalable and maintainable data pipelines often involves balancing flexibility with standardization. As data volumes grow and systems diversify, manually implementing ingestion and transformation logic for every source becomes inefficient and error-prone. To address this, we’ve developed metadata-driven ELT and ETL frameworks (depending on the transformation stage) for several clients, including Santander, Inter Cars, and LOT.

Importantly, our frameworks operate across the entire medallion architecture, supporting end-to-end traceability, reusability, and governance. When loading data from external sources, we use an ELT approach: we extract raw datasets from source systems (or a landing zone), load them into the Bronze layer in Delta Lake, and apply lightweight, metadata-driven transformations to reach the Silver layer. For more complex logic, such as business rule enforcement or aggregation, we apply ETL patterns to move curated data from Silver to Gold. Both patterns rely on metadata stored in configuration layers to drive orchestration logic across batch and streaming pipelines.
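
To make the idea concrete, here is a minimal sketch of how configuration metadata can drive a Bronze-to-Silver step. It is an illustration under stated assumptions, not the framework used in the projects above: the `SourceConfig` structure, the table and column names, and the `build_silver_sql` helper are all hypothetical, and the sketch only renders SQL text rather than running it on a cluster.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class SourceConfig:
    """One metadata row describing a Bronze-to-Silver load (all names hypothetical)."""
    source_table: str                       # Bronze table to read from
    target_table: str                       # Silver table to write to
    column_map: Dict[str, Tuple[str, str]]  # source column -> (target column, SQL type)
    filter_expr: Optional[str] = None       # optional row-level filter


def build_silver_sql(cfg: SourceConfig) -> str:
    """Render the SELECT that renames, casts, and optionally filters Bronze rows."""
    projections = ",\n  ".join(
        f"CAST({src} AS {sql_type}) AS {dst}"
        for src, (dst, sql_type) in cfg.column_map.items()
    )
    sql = f"SELECT\n  {projections}\nFROM {cfg.source_table}"
    if cfg.filter_expr:
        sql += f"\nWHERE {cfg.filter_expr}"
    return sql


def build_plan(configs: List[SourceConfig]) -> List[Tuple[str, str]]:
    """Return (target_table, sql) pairs; an orchestrator would execute them in order."""
    return [(cfg.target_table, build_silver_sql(cfg)) for cfg in configs]


# Hypothetical metadata entry; in practice this would come from a config table or file.
orders = SourceConfig(
    source_table="bronze.orders",
    target_table="silver.orders",
    column_map={"id": ("order_id", "BIGINT"), "ts": ("ordered_at", "TIMESTAMP")},
    filter_expr="id IS NOT NULL",
)

for target, sql in build_plan([orders]):
    print(f"-- load {target}\n{sql}")
```

The point of the design is that onboarding a new source means adding a metadata entry, not writing a new pipeline. On Databricks, the rendered statement could be passed to `spark.sql` and the result written to the target Delta table.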

Streaming ingestion of logs and CDC records

Efficiently integrating streaming data into a lakehouse architecture presents challenges related to event ordering, schema variability, and processing guarantees. This is especially true for semi-structured log data and Change Data Capture (CDC) events sourced from operational databases – both of which need to be ingested continuously and reliably.

In one implementation for Inter Cars, we handled real-time ingestion of online process logs to support monitoring and operational decision-making. In another case, we captured CDC streams from multiple heterogeneous databases using Debezium and Kafka – delivering the data to Delta Lake for further transformation. The streaming pipelines were designed to propagate through the entire medallion architecture, supporting analytics and machine learning models with up-to-date, curated outputs.
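
The core of CDC processing, collapsing a batch of change events to the latest state per key and then upserting or deleting, can be sketched in plain Python. This is a simplified illustration, not the pipeline described above. It assumes events follow the Debezium envelope (`op`, `before`, `after`, `ts_ms`), that the primary key column is called `id` (a hypothetical name), and that `ts_ms` is good enough for ordering; a production pipeline would more likely order by the source log position.

```python
from typing import Any, Dict, Iterable

Event = Dict[str, Any]
Row = Dict[str, Any]


def latest_per_key(events: Iterable[Event], key: str = "id") -> Dict[Any, Event]:
    """Keep only the newest change event per primary key, judged by ts_ms."""
    latest: Dict[Any, Event] = {}
    for ev in events:
        # Delete events carry the row image in "before"; all others in "after".
        row = ev["before"] if ev["op"] == "d" else ev["after"]
        k = row[key]
        if k not in latest or ev["ts_ms"] >= latest[k]["ts_ms"]:
            latest[k] = ev
    return latest


def apply_changes(state: Dict[Any, Row], events: Iterable[Event], key: str = "id") -> Dict[Any, Row]:
    """Upsert or delete rows in `state` so it reflects the latest event per key."""
    for k, ev in latest_per_key(events, key).items():
        if ev["op"] == "d":
            state.pop(k, None)       # delete: drop the row if it exists
        else:
            state[k] = ev["after"]   # "c" create, "u" update, "r" snapshot read
    return state


# A small batch with an out-of-order event for id=1.
batch = [
    {"op": "c", "before": None, "after": {"id": 1, "name": "a"}, "ts_ms": 1},
    {"op": "u", "before": {"id": 1, "name": "a"}, "after": {"id": 1, "name": "b"}, "ts_ms": 3},
    {"op": "u", "before": {"id": 1, "name": "a"}, "after": {"id": 1, "name": "stale"}, "ts_ms": 2},
    {"op": "c", "before": None, "after": {"id": 2, "name": "x"}, "ts_ms": 2},
    {"op": "d", "before": {"id": 2, "name": "x"}, "after": None, "ts_ms": 4},
]

print(apply_changes({}, batch))
```

On Delta Lake the same logic is typically expressed as a `MERGE INTO` statement executed per micro-batch, with the dictionary replaced by the target table. Note that this sketch only deduplicates within one batch; it does not guard against a late batch overwriting newer state.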

Customer data anonymization mechanism aligned with GDPR

Databricks can also be tailored to meet the specific regulatory and operational demands of individual industries. In banking, one of the most critical areas is data governance, where compliance with regulations such as GDPR is mandatory. Strict control over data access, retention, and deletion is not only a best practice but a legal obligation.

At Santander Bank Polska, we developed a mechanism to handle customer data deletion requests under the right to be forgotten. The Databricks platform triggers workflows that trace all related PII records across the lakehouse and delete or anonymize them. These operations are integrated directly into the data pipelines, ensuring full auditability while preserving the integrity of other data products.
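
One way to picture such a workflow is a registry that records where PII lives and what should happen to it, from which the erasure statements are generated. The sketch below is a hypothetical illustration and not the mechanism built for the bank: the `PiiTable` registry, the table and column names, and the choice to anonymize by setting columns to `NULL` are all assumptions.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class PiiTable:
    """Registry entry describing where PII lives (all names hypothetical)."""
    table: str                     # fully qualified table name
    customer_key: str              # column identifying the customer
    pii_columns: Tuple[str, ...]   # columns to blank out when anonymizing
    action: str                    # "delete" or "anonymize"


def erasure_statements(customer_id: int, registry: Iterable[PiiTable]) -> List[str]:
    """Build one SQL statement per registered table for a single erasure request."""
    cid = int(customer_id)  # force a numeric literal so nothing else reaches the SQL text
    statements = []
    for t in registry:
        if t.action == "delete":
            statements.append(f"DELETE FROM {t.table} WHERE {t.customer_key} = {cid}")
        elif t.action == "anonymize":
            assignments = ", ".join(f"{col} = NULL" for col in t.pii_columns)
            statements.append(
                f"UPDATE {t.table} SET {assignments} WHERE {t.customer_key} = {cid}"
            )
        else:
            raise ValueError(f"Unknown action for {t.table}: {t.action}")
    return statements


registry = [
    PiiTable("silver.customers", "customer_id", ("email", "phone"), "anonymize"),
    PiiTable("silver.marketing_consents", "customer_id", (), "delete"),
]

# The returned list doubles as an audit record of what was run for the request.
for stmt in erasure_statements(42, registry):
    print(stmt)
```

Keeping the list of generated statements gives a simple audit trail per request. On Delta tables, removed values remain in older data files until `VACUUM` cleans them up, so a real workflow would also need to account for file retention.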

Implementing software engineering best practices with Databricks Asset Bundles

To improve consistency, reusability, and auditability in our data and AI projects, we use Databricks Asset Bundles (DAB) as a foundation for managing infrastructure and code. DAB allows us to describe Databricks resources, such as jobs, notebooks, pipelines, and ML models, entirely as code, versioned in Git and deployed automatically across environments. This approach aligns well with DevOps principles, supporting automation, code review, and reproducible deployments for data workflows. It also supports MLOps practices: machine learning pipelines, model training, and serving endpoints can be defined, versioned, and promoted through environments using the same declarative structure.

Each bundle contains all project assets: infrastructure definitions, notebooks and Python modules, Delta Live Tables pipelines, model endpoints, MLflow components, and unit/integration tests. This enables us to follow modern software engineering practices – including code review, CI/CD, and automated testing – while ensuring full traceability. DAB relies on Terraform and the Databricks CLI under the hood, giving teams local control and repeatable deployments from development to production.

Accelerating prototyping with Databricks AutoML

When exploring a new dataset, it’s often hard to tell upfront whether it has real predictive potential. Instead of spending days building models from scratch, teams need a way to quickly check what’s feasible.

That’s where we turn to Databricks AutoML – a tool that lets us upload raw data and automatically generate baseline models, complete with performance metrics and explainability reports. It takes care of feature selection, model training, and tuning, so we can focus on evaluating results. The generated notebooks aren’t a black box either – they’re production-grade and easy to tweak. This speeds up the discovery phase and gives us a solid starting point for building more advanced, customized solutions.

Discover more BitPeak Databricks implementations

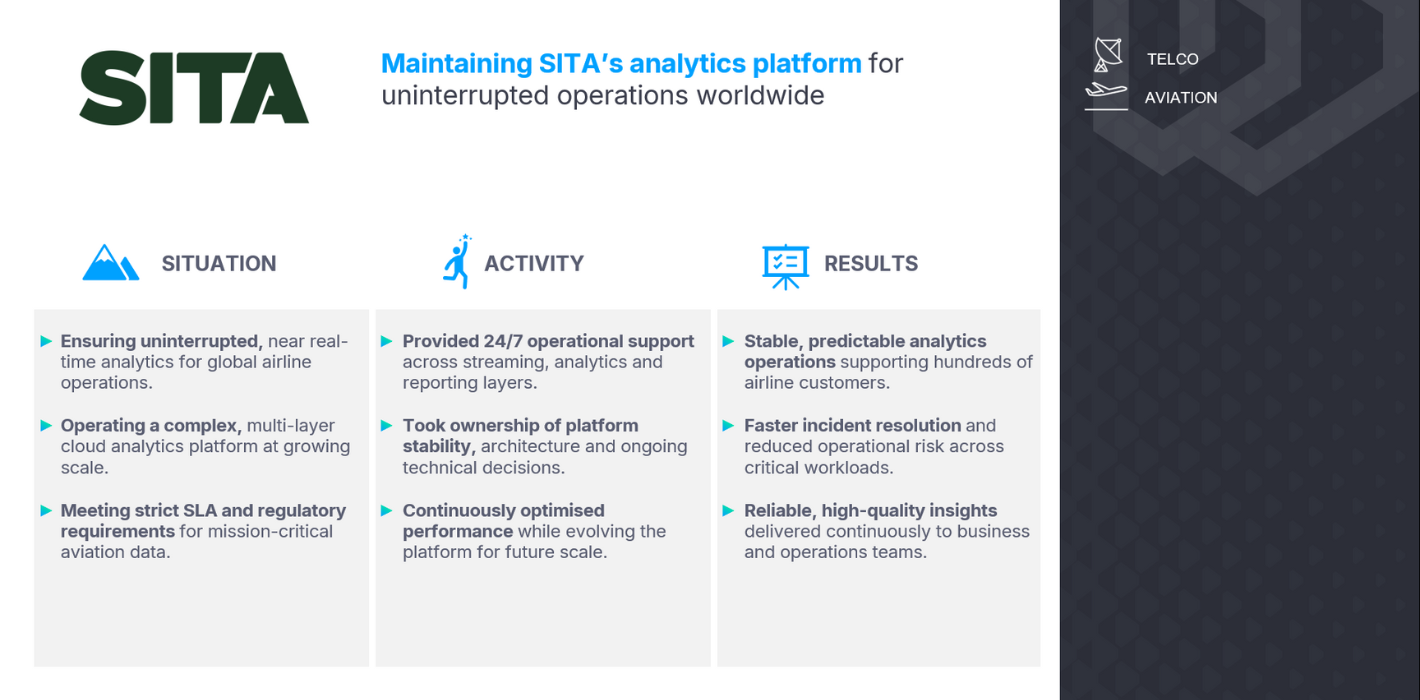

If this article sparked your interest, be sure to explore the case studies section on our website. You’ll find examples of how we apply Databricks across different challenges and domains. You’ll learn how we implemented Unity Catalog in the Santander project, helping structure access controls across multiple teams while maintaining strict regulatory compliance. You’ll also see how, in our work for SITA, we isolated training and operational data, supporting safe and transparent development of AI models. Other examples include scalable, metadata-driven pipelines built for Inter Cars, which improved forecasting and inventory reliability across thousands of product lines.

All content in this blog is created exclusively by technical experts specializing in Data Consulting, Data Insight, Data Engineering, and Data Science. Our aim is purely educational, providing valuable insights without marketing intent.


(+48) 508 425 378 office@bitpeak.pl
